High-Performance Computing Architecture Design

Design HPC and AI infrastructure starting from workloads, not from hardware brochures.

This service turns loosely defined requirements into a concrete, testable architecture for HPC and AI clusters. We start with a short discovery phase covering current and expected workloads, such as CPU-bound simulations, GPU-heavy training jobs and pre/post-processing pipelines, together with their storage access patterns.

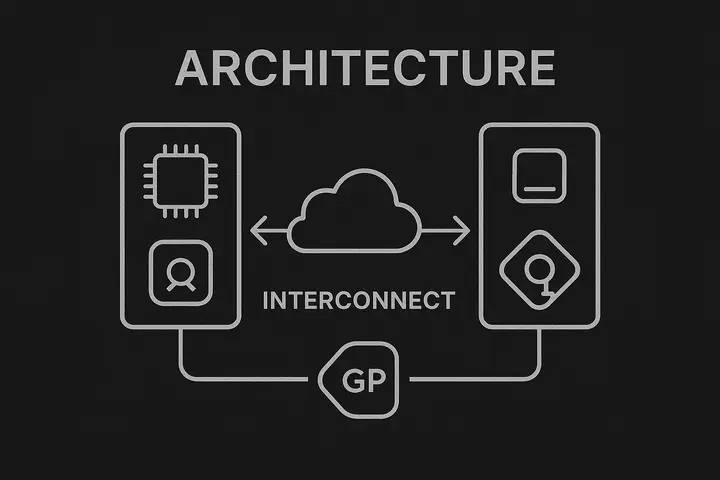

From there, we propose node types, CPU/GPU ratios, memory capacity, NUMA layout and an interconnect design that match the actual demand profile. We evaluate candidate network topologies, such as fat-tree and dragonfly, and spell out their trade-offs in cost, scalability and failure domains.
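To make the topology trade-off more concrete, here is a minimal Python sketch showing how switch port count (radix) limits the size of a two-level fat tree (leaf-spine). The helper `size_leaf_spine`, its parameters and the 40-port example are hypothetical illustrations, not the sizing method used in this service. The sketch assumes uniform switches, one port per host and exactly one uplink from each leaf to each spine. Real designs must also account for cabling, optics and failure domains.

```python
import math
from dataclasses import dataclass


@dataclass
class LeafSpinePlan:
    leaves: int            # number of leaf (edge) switches
    spines: int            # number of spine switches
    hosts_per_leaf: int    # host-facing ports per leaf
    uplinks_per_leaf: int  # spine-facing ports per leaf


def size_leaf_spine(nodes: int, radix: int, oversub: float = 1.0) -> LeafSpinePlan:
    """Hypothetical helper: size a two-level fat tree (leaf-spine) for `nodes` hosts.

    Assumptions: every switch has `radix` ports, each host uses one port, and each
    leaf sends exactly one uplink to every spine. `oversub` is the down:up port
    ratio on a leaf (1.0 means non-blocking).
    """
    if nodes < 1 or radix < 2 or oversub <= 0:
        raise ValueError("nodes >= 1, radix >= 2 and oversub > 0 are required")
    # Split leaf ports so that down / up is approximately `oversub` (rounded down).
    down = int(radix * oversub / (1 + oversub))
    up = radix - down
    if down < 1 or up < 1:
        raise ValueError("oversub leaves no host-facing or no uplink ports")
    leaves = math.ceil(nodes / down)
    # With one uplink per spine, the spine count equals the uplinks per leaf,
    # and each spine needs one port per leaf, so leaves cannot exceed radix.
    if leaves > radix:
        raise ValueError(
            f"{nodes} hosts need {leaves} leaves but a {radix}-port spine "
            f"can only reach {radix}; add a third tier or consider dragonfly"
        )
    return LeafSpinePlan(leaves=leaves, spines=up, hosts_per_leaf=down, uplinks_per_leaf=up)


if __name__ == "__main__":
    # Example: 512 nodes on 40-port switches, non-blocking and then 2:1 oversubscribed.
    print(size_leaf_spine(512, 40))
    print(size_leaf_spine(512, 40, oversub=2.0))
```

With 40-port switches, a non-blocking two-level tree tops out at 40 × 20 = 800 hosts. Beyond that point, a third switching tier or a different topology such as dragonfly becomes necessary, and that is where the cost and scalability comparison starts to matter.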

The result is a concise architecture document that can feed directly into an RFP or procurement process. It includes bill-of-materials options, capacity projections and the design assumptions behind them, so that each assumption can be validated over time.

Case study – From ad-hoc nodes to a coherent cluster

A mid-sized research lab had six different generations of servers, purchased over several years with no clear architecture behind them. Users experienced unpredictable performance and frequent contention on a shared NFS server.

After analysing workloads and failure patterns, we proposed a unified architecture that separated interactive, batch and storage roles, introduced a consistent node type for new purchases and repurposed older hardware for less critical jobs. The new design simplified management, made procurement easier and removed the single-NFS-server bottleneck, reducing support tickets by more than 40%.