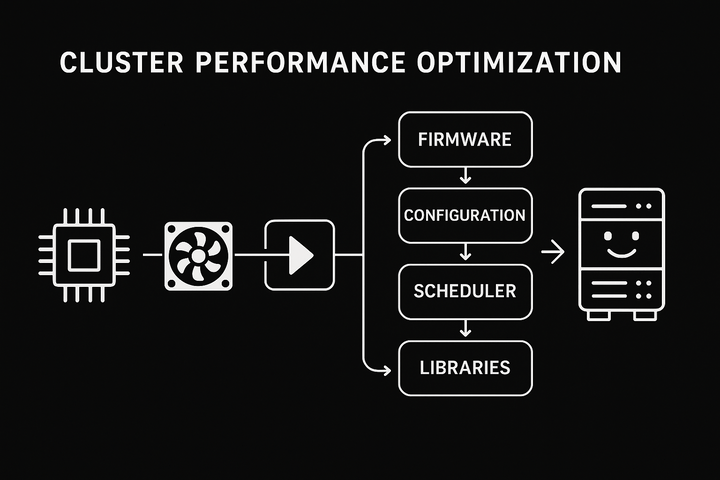

Cluster Performance Optimization

End-to-end tuning of the cluster stack, from firmware and BIOS to scheduler and libraries.

This service is aimed at clusters that are already in production but under-delivering on performance. We take a measurement-first approach based on real jobs, not synthetic micro-benchmarks alone.

We review BIOS/firmware settings, CPU frequency policies, NUMA layout and hugepage configuration. On the software side, we look at MPI configuration, CPU and GPU affinity, container runtimes, file system mount options and SLURM or other scheduler parameters.
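As a small illustration of the hugepage part of such a review, the sketch below summarises the hugepage counters that Linux exposes in `/proc/meminfo`. The function names and the sample text are hypothetical and only for illustration; on a real node the text would be read from `/proc/meminfo` itself.

```python
def parse_hugepages(meminfo_text):
    """Extract the hugepage counters from the text of /proc/meminfo."""
    wanted = {"HugePages_Total", "HugePages_Free", "HugePages_Rsvd",
              "HugePages_Surp", "Hugepagesize"}
    result = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        key = key.strip()
        if not sep or key not in wanted:
            continue
        fields = rest.split()
        if fields:
            # Counters are plain integers; Hugepagesize carries a "kB" suffix.
            result[key] = int(fields[0])
    return result


def hugepage_summary(meminfo_text):
    """Condense the counters into the figures usually checked first."""
    info = parse_hugepages(meminfo_text)
    total = info.get("HugePages_Total", 0)
    free = info.get("HugePages_Free", 0)
    size_kb = info.get("Hugepagesize", 0)
    return {
        "pages_total": total,
        "pages_in_use": total - free,
        "reserved_mib": total * size_kb // 1024,
    }


# Hypothetical sample, shaped like the relevant lines of /proc/meminfo.
SAMPLE = """\
MemTotal:       263856780 kB
HugePages_Total:    1024
HugePages_Free:      256
HugePages_Rsvd:        0
HugePages_Surp:        0
Hugepagesize:       2048 kB
"""

if __name__ == "__main__":
    print(hugepage_summary(SAMPLE))
```

A pool that is reserved but barely used, or fully used with a non-zero surplus count, is the kind of finding that would feed into the tuning plan.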

Tuning steps are applied in small, controlled batches with before/after metrics. The outcome is a documented set of configuration changes that can be reproduced on new nodes and rolled back if needed.
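A before/after comparison of this kind can be sketched as follows. This is a minimal example that assumes the metric is job runtime in seconds and that a change is kept only if the median improves by a chosen margin. The function names, the 2% threshold and the sample runtimes are illustrative assumptions, not values from a real engagement.

```python
from statistics import median


def relative_change(before, after):
    """Relative change of the median; -0.10 means 10% lower after the change."""
    base = median(before)
    if base == 0:
        raise ValueError("baseline median is zero")
    return (median(after) - base) / base


def keep_change(before, after, min_improvement=0.02):
    """Keep a tuning step only if the median runtime dropped by at least
    min_improvement (an assumed threshold); otherwise roll it back."""
    return relative_change(before, after) <= -min_improvement


# Hypothetical runtimes (seconds) of the same job before and after one batch.
runtimes_before = [412.0, 405.0, 420.0]
runtimes_after = [371.0, 380.0, 368.0]

if __name__ == "__main__":
    change = relative_change(runtimes_before, runtimes_after)
    print(f"median runtime change: {change:+.1%}")
    print("keep" if keep_change(runtimes_before, runtimes_after) else "roll back")
```

Using the median rather than the mean keeps a single outlier run from deciding whether a batch of changes is kept.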

Case study – more than 20% higher utilisation on unchanged hardware

A customer reported that their users were complaining about long queue times despite low measured CPU utilisation. Our analysis showed a combination of suboptimal backfill settings, over-conservative job size defaults and NUMA imbalance.

By adjusting the SLURM partition layout, fine-tuning backfill and preemption rules, and correcting CPU pinning for common MPI patterns, we increased average cluster utilisation by more than 20% while keeping job slowdown within agreed limits. No new hardware had to be purchased.
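To make the utilisation figure concrete, the sketch below computes utilisation as allocated core-hours divided by available core-hours over a time window. The case study does not state whether its 20% is a relative gain or a gain in percentage points, so the example reports both. The cluster size and job lists are invented for illustration and are not the customer's data.

```python
def utilisation(jobs, total_cores, window_hours):
    """Fraction of the available core-hours that was allocated to jobs.

    jobs is a list of (cores, hours) pairs inside the window.
    """
    used = sum(cores * hours for cores, hours in jobs)
    return used / (total_cores * window_hours)


# Hypothetical 1000-core cluster observed over 24 hours.
TOTAL_CORES = 1000
WINDOW_HOURS = 24

# Invented job mixes before and after tuning: (cores, hours).
jobs_before = [(200, 24), (300, 16), (100, 12)]
jobs_after = [(200, 24), (300, 20), (150, 16)]

u_before = utilisation(jobs_before, TOTAL_CORES, WINDOW_HOURS)
u_after = utilisation(jobs_after, TOTAL_CORES, WINDOW_HOURS)

# Two different ways to state the same improvement.
relative_gain = u_after / u_before - 1.0
point_gain = (u_after - u_before) * 100.0

if __name__ == "__main__":
    print(f"utilisation before: {u_before:.1%}, after: {u_after:.1%}")
    print(f"relative gain: {relative_gain:.1%}, percentage points: {point_gain:.1f}")
```

Stating which of the two measures is meant avoids ambiguity when the result is reported to users or management.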