Parallel File System Design & Tuning

Design and tuning of Lustre, BeeGFS or Spectrum Scale based on real I/O patterns.

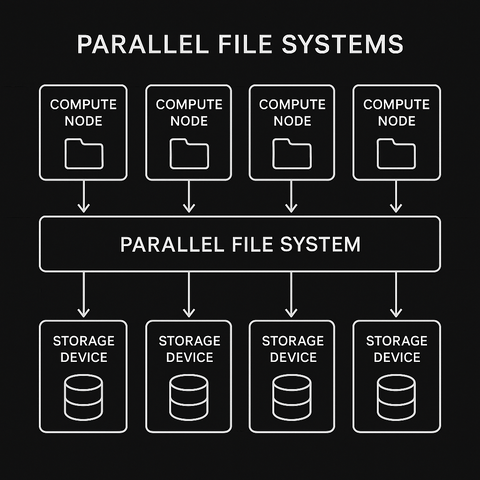

Parallel file systems are often either over-designed and underused, or under-designed and overloaded. This service starts with understanding how applications actually touch storage: small metadata-heavy workloads, large sequential checkpoints, random I/O from data analytics, or a mix of all of these.
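
As a rough illustration of how observed I/O can be sorted into these categories, the sketch below classifies a summary of one job's I/O activity. The `IoSummary` fields and all thresholds are hypothetical choices made for this example; real counters would come from whatever profiling or file system statistics are available on site.

```python
from dataclasses import dataclass

MIB = 1024 ** 2


@dataclass
class IoSummary:
    """Hypothetical per-job I/O summary (field names are assumptions)."""
    metadata_ops: int    # opens, stats, creates, unlinks
    data_ops: int        # read and write calls
    bytes_moved: int     # total bytes read or written
    sequential_ops: int  # data ops that continued from the previous offset


def classify(summary: IoSummary) -> str:
    """Label a workload using illustrative, untuned thresholds."""
    total_ops = summary.metadata_ops + summary.data_ops
    if total_ops == 0:
        return "idle"

    # More than half of all operations touch metadata only.
    if summary.metadata_ops / total_ops > 0.5:
        return "metadata-heavy"

    # From here on data_ops is guaranteed to be greater than zero.
    avg_request = summary.bytes_moved / summary.data_ops
    sequential_fraction = summary.sequential_ops / summary.data_ops

    if avg_request >= MIB and sequential_fraction >= 0.8:
        return "large-sequential"
    if sequential_fraction < 0.2:
        return "random"
    return "mixed"
```

A checkpoint-style job with mostly multi-megabyte sequential writes would be labelled `large-sequential`, while a job issuing many small scattered reads would be labelled `random`.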

Based on this, we design or adjust the layout of metadata servers, object/storage targets, striping policies and caching behaviour. We look at failure domains, rebuild times and background scanning tasks so that the system remains stable under stress.
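
One way to think about a striping policy is as a mapping from expected file size to the number of targets a file is spread across. The following is a minimal sketch of that idea; the function name and the size thresholds (64 MiB, 4 GiB) and stripe counts (1, 4, 16) are assumptions for illustration, not recommendations for any particular system.

```python
MIB = 1024 ** 2
GIB = 1024 ** 3


def suggest_stripe_count(file_size_bytes: int, available_targets: int) -> int:
    """Suggest a stripe count for a file (thresholds are illustrative only)."""
    if available_targets < 1:
        raise ValueError("at least one storage target is required")
    if file_size_bytes < 0:
        raise ValueError("file size cannot be negative")

    if file_size_bytes < 64 * MIB:
        wanted = 1    # small files: striping adds overhead without benefit
    elif file_size_bytes < 4 * GIB:
        wanted = 4    # medium files: modest parallelism
    else:
        wanted = 16   # large files: spread across many targets

    # Never ask for more stripes than there are targets.
    return min(wanted, available_targets)
```

The point of the sketch is the shape of the policy: small files stay on a single target, and the stripe count grows only when a file is large enough to benefit from parallel access.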

Documentation includes recommended monitoring metrics, housekeeping procedures and guidance on when to add more capacity versus when to fix imbalances.
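
The capacity-versus-imbalance question can be sketched as a simple check on per-target fill levels: if the targets are full on average, more capacity is needed; if the average is acceptable but some targets are far fuller than others, the problem is imbalance. The function name and both thresholds below are hypothetical and would need adjusting to the system at hand.

```python
from statistics import mean


def capacity_advice(fill_fractions, full_threshold=0.8, spread_threshold=0.2):
    """Return a coarse recommendation from per-target fill fractions (0.0 to 1.0).

    Both thresholds are illustrative assumptions, not vendor guidance.
    """
    if not fill_fractions:
        raise ValueError("need at least one target")

    average_fill = mean(fill_fractions)
    spread = max(fill_fractions) - min(fill_fractions)

    if average_fill >= full_threshold:
        return "add capacity"
    if spread >= spread_threshold:
        return "fix imbalance"
    return "no action"
```

For example, targets at 30%, 90% and 35% full average just over half full but differ by 60 percentage points, so the sketch points at imbalance rather than a lack of capacity.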

Case study – Stabilising a noisy Lustre scratch

A shared scratch file system backed by Lustre was considered “unreliable” by users because of frequent slowdowns. Investigation revealed a combination of aggressive stripe settings for tiny files and poorly scheduled maintenance jobs.

We introduced sensible defaults for striping, separated metadata-heavy workloads into a dedicated pool and rescheduled background scans away from peak job hours. The result was much more predictable performance and significantly fewer user complaints.
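
Choosing when to run background scans can be approached by looking for the quietest contiguous window in a typical day. The sketch below does this for a list of hourly load figures, wrapping around midnight. The function name and the input format (one load value per hour, for example running job counts) are assumptions made for this example and do not describe the tooling used in the case study.

```python
def quietest_window(hourly_load, window_hours):
    """Return the start hour of the contiguous window with the lowest total load.

    The window may wrap around the end of the list (past midnight).
    Ties are resolved in favour of the earliest start hour.
    """
    hours = len(hourly_load)
    if not 1 <= window_hours <= hours:
        raise ValueError("window must fit within the load profile")

    best_start = 0
    best_total = None
    for start in range(hours):
        total = sum(hourly_load[(start + i) % hours] for i in range(window_hours))
        if best_total is None or total < best_total:
            best_start, best_total = start, total
    return best_start
```

Given a profile that is busy during the day and nearly idle in the early morning, the function returns the start of the early-morning window, which is where a maintenance scan would interfere least with user jobs.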