Data Center Energy Efficiency

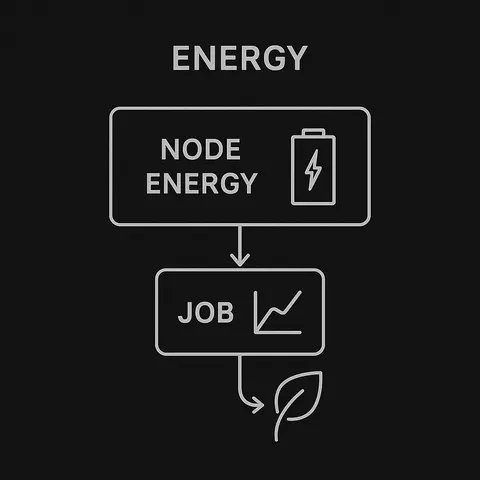

Reduce energy per useful job while keeping performance and reliability.

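Energy and cooling costs are now a major part of the total cost of ownership of HPC and AI infrastructure. This service focuses on reducing energy per useful job rather than chasing power usage effectiveness (PUE) figures alone, since PUE measures facility overhead relative to IT load and says nothing about how much work that IT load completes.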
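To make the difference between the two metrics concrete, here is a minimal sketch in Python. The `Period` class, its field names and all figures are hypothetical and chosen only for illustration; PUE is computed in the standard way, as total facility energy divided by IT equipment energy, and "energy per useful job" is assumed here to mean facility energy divided by successfully completed jobs.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Energy and job counts for one reporting period (hypothetical schema)."""
    facility_kwh: float   # total facility energy, including cooling
    it_kwh: float         # energy delivered to the IT equipment
    jobs_completed: int   # jobs that finished successfully


def pue(p: Period) -> float:
    """Power usage effectiveness: facility energy over IT energy."""
    return p.facility_kwh / p.it_kwh


def energy_per_job(p: Period) -> float:
    """Facility energy spent per successfully completed job, in kWh."""
    if p.jobs_completed == 0:
        raise ValueError("no completed jobs in this period")
    return p.facility_kwh / p.jobs_completed


# Illustrative numbers only: same job count, less IT energy after tuning.
before = Period(facility_kwh=1500.0, it_kwh=1000.0, jobs_completed=500)
after = Period(facility_kwh=1200.0, it_kwh=800.0, jobs_completed=500)

for label, period in (("before", before), ("after", after)):
    print(label, "PUE:", pue(period), "kWh/job:", energy_per_job(period))
```

In this made-up example the PUE is identical in both periods, yet the energy spent per job falls, which is the kind of improvement a PUE-only view would miss.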

We analyse node power profiles, cooling layout, rack placement and utilisation patterns. Techniques include power capping, frequency tuning for specific workloads, thermal zoning and scheduling that respects cooling constraints.

The outcome is a set of practical recommendations together with a simple model of how energy use tracks job throughput, making it easier to justify future efficiency investments.
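As a rough illustration of what such a model might look like, the sketch below fits a straight line to energy against completed jobs using ordinary least squares. The linear form (a fixed baseline plus a marginal energy per job) is an assumption made for this example, not a description of the model delivered in any engagement, and the sample figures are invented.

```python
def fit_linear(jobs, energy_kwh):
    """Least-squares fit of energy = baseline + per_job * jobs.

    Returns (baseline_kwh, per_job_kwh). Both inputs are equal-length
    sequences with one entry per observation period.
    """
    if len(jobs) != len(energy_kwh) or len(jobs) < 2:
        raise ValueError("need at least two paired observations")
    n = len(jobs)
    mean_x = sum(jobs) / n
    mean_y = sum(energy_kwh) / n
    sxx = sum((x - mean_x) ** 2 for x in jobs)
    if sxx == 0:
        raise ValueError("job counts must vary between observations")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(jobs, energy_kwh))
    per_job = sxy / sxx
    baseline = mean_y - per_job * mean_x
    return baseline, per_job


def predict_energy(baseline_kwh, per_job_kwh, jobs):
    """Estimated energy for a period with the given number of jobs."""
    return baseline_kwh + per_job_kwh * jobs


# Invented weekly observations: completed jobs and facility energy in kWh.
weekly_jobs = [100, 200, 300]
weekly_kwh = [500.0, 700.0, 900.0]

baseline, per_job = fit_linear(weekly_jobs, weekly_kwh)
print("baseline kWh:", baseline, "kWh per job:", per_job)
print("estimate for 400 jobs:", predict_energy(baseline, per_job, 400))
```

Under this assumed form, the baseline term captures energy drawn regardless of load, and the slope gives the marginal cost of each additional job, which is the figure an efficiency investment would aim to lower.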

Case study – Cutting power without hurting throughput

One customer was under pressure to reduce power consumption of a CPU-only cluster. A naive approach of capping all nodes led to unacceptable runtime increases for some applications.

We profiled key workloads and discovered that many were memory-bound: their runtime was dominated by memory access rather than core clock speed, so modest frequency reductions barely affected them. By selectively capping certain nodes and directing suitable jobs there, we reduced total energy use while keeping overall throughput almost unchanged.
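The routing decision described in this case study can be sketched as a simple rule: measure each application's runtime at nominal and at reduced frequency, and send it to the capped nodes only if the slowdown stays under a tolerance. The function names, the partition labels, the 5% threshold and the profile figures below are all hypothetical; the actual criteria used for the customer are not stated here.

```python
def slowdown(runtime_nominal_s: float, runtime_reduced_s: float) -> float:
    """Fractional runtime increase at reduced frequency (0.05 means 5% slower)."""
    if runtime_nominal_s <= 0:
        raise ValueError("nominal runtime must be positive")
    return runtime_reduced_s / runtime_nominal_s - 1.0


def choose_partition(runtime_nominal_s: float,
                     runtime_reduced_s: float,
                     max_slowdown: float = 0.05) -> str:
    """Pick 'capped' for frequency-insensitive jobs, 'uncapped' otherwise.

    max_slowdown is an assumed tolerance, not a recommended value.
    """
    if slowdown(runtime_nominal_s, runtime_reduced_s) <= max_slowdown:
        return "capped"
    return "uncapped"


# Invented profiling results: (runtime at nominal, runtime at reduced), seconds.
profiles = {
    "memory_bound_solver": (1000.0, 1020.0),   # barely affected
    "compute_bound_kernel": (1000.0, 1300.0),  # strongly affected
}

placement = {
    app: choose_partition(nominal, reduced)
    for app, (nominal, reduced) in profiles.items()
}
print(placement)
```

In practice the resulting labels would feed whatever mechanism the site's scheduler offers for steering jobs to a node group; that integration is site-specific and is left out of this sketch.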